[From Fast Company's Co.Design site, where the article includes more images and two videos]

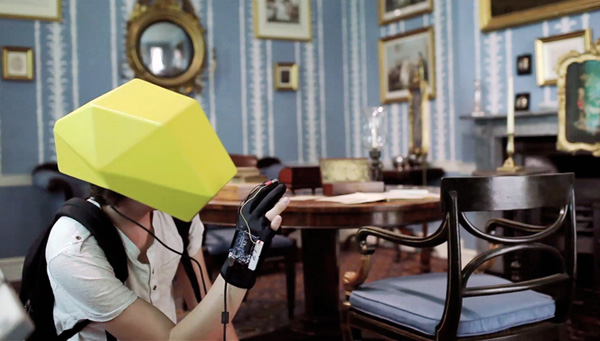

HyperReality Helmet Uses Kinect To Create An Out-Of-Body Experience

Maxence Parache’s experimental augmented-reality system lets you detach your point of view from your body.

July 28, 2011

We take our first-person visual perspective for granted every second of the day; we have to, because our eyeballs are attached to our heads. But what if you could detach your personal "camera angle" at any moment and float away from your own body while still inhabiting it, like an on-demand out-of-body experience? Designer Maxence Parache has created the next best thing in his HyperReality system. It uses a Microsoft Kinect to scan your physical environment and display it inside a virtual-reality helmet, so you can rotate the visual angle any way you like.

Granted, the visual display inside that weird yellow helmet isn't exactly Tron-quality: your local environment is rendered as an array of monochrome dots. And rotating a camera angle separately from the virtual "body" you inhabit is something that video gamers (and Second Life enthusiasts) do all the time. The interesting thing about HyperReality is how it combines these two interfaces into one physically embodied experience. Using an Arduino-powered glove equipped with force sensors, "the user is able to rotate the 3D view around the virtual (scanned) environment, change his point of view and enable new behaviors," Parache tells Co.Design via email. In other words, you can still physically interact with all the "real stuff" around you, but you can also pan your "mind's eye" around the scene independently of your body, just as you would in a video game.

In fact, you have to: the Kinect-powered visual display is so coarse that "the user needs to activate the 3D rotation to give some depth to objects and be able to appreciate the notion of distance in space," Parache explains. "This vision is a bit constraining at the beginning, [but] in my opinion a digital experience trying to enable new behaviors has to be constraining for the user." You wouldn't expect a radical upgrade to your physical sensorium to feel easy, now would you?

Parache sees HyperReality being useful for fine art galleries that want to offer visitors a way to experience exhibits in an augmented way. He's already done test runs at the Geffrye Museum and The Last Tuesday Society. "The museum is experienced as a whole, as the user is navigating from the large-scaled architecture of the rooms to the smallest pieces of art thanks to this virtual vision," he says. "Behaviours are being modified, the notion of scale is being distorted, all this pushing the boundaries of the physical space." Hey, it sure beats those lame guided audio tours.

—

John Pavlus is a writer and film…