[This looks like another step toward effective mobile/wearable augmented reality (I like the heading “Mixing Virtual Reality and Reality Reality”)… The article is from ReadWrite, where there’s a 41-second video; more coverage, including more videos, is available from 3D Printing Industry. –Matthew]

Here’s A New Way To Step Into A Virtual World

Occipital’s mobile 3D-scanning sensor goes virtual.

Signe Brewster

Mar 27, 2015

When you strap on an Oculus Rift virtual-reality headset, you’re free to look up, down and around. But as soon as you try to explore the virtual world further, you’re stuck. You can’t interact with your surroundings or walk across the room.

New controllers and sensors hitting the market are built to solve this problem, whether by tracking the precise location of your fingers so you can grab that virtual gun or giving you a simple joystick so you can “walk” from place to place. The HTC Vive, one of the highest-profile new headsets, lets you move around a real room and incorporates your motion into VR.

The startup Occipital thinks there’s a simpler way. Up until today, its candy-bar-shaped Structure Sensor, an accessory for mobile devices, has mostly been used for 3D scanning of physical objects, for instance to create 3D-printable virtual models. Now, though, Occipital wants to expand into virtual and augmented reality by giving its sensor the ability to map entire rooms and translate a user’s actual movement onto a screen, and thus into a virtual world.

Mixing Virtual Reality And Reality Reality

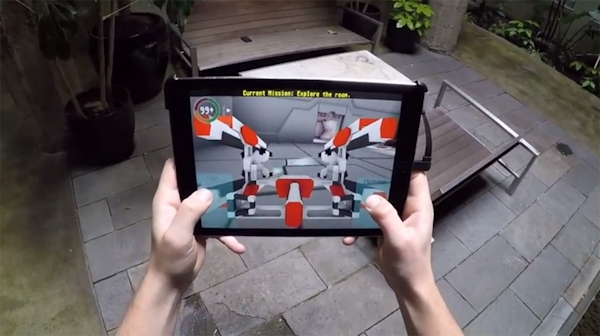

At the Occipital office in San Francisco’s Mission Bay neighborhood, I recently rambled around with an iPad in my hands and a Structure Sensor strapped to its back. On its screen, I explored a Portal-esque room, hoping to open a door and move on to the next level. I noticed a laser crossing the room; blocking it would open the door. But to do so I needed a few of the cubes moving along a line near the ceiling.

In the game, which is called S.T.A.R. Ops, I reached a coffee machine by physically walking down the long row of desks in the Occipital office; my steps through the real office carried me through the virtual room. I tapped one corner of the screen to grab a coffee cup, then moved the tablet away from my body as if I were placing the cup in the machine. Coffee poured in.

I powered up a nearby gun by tipping the iPad to pour the coffee into a grate. I shot down some cubes and then stacked them in front of the laser, the iPad once again serving as a physical representation of the blocks. The door opened.

It’s a funny mix of the virtual and real worlds. Most virtual reality experiences are seated and don’t incorporate the tipping and reaching motions S.T.A.R. Ops calls for. The movements are fairly intuitive, but they take some getting used to. Still, the learning curve is short: on my second run through the level, I cut my time by two thirds.

Positional Tracking Gone Wild

The Structure Sensor works by projecting infrared dots across everything in a room. It can sense depth and motion based on the dots’ behavior and build a map of them that updates at 30 frames per second. Occipital calls it “unbounded positional tracking.”
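Structured-light depth sensors of this general kind typically recover distance by triangulation: the infrared projector and the infrared camera sit a known distance apart (the baseline), so a dot landing on a nearby surface appears shifted farther from its reference position than a dot on a distant one. The Python sketch below illustrates that relationship (depth = focal length × baseline ÷ disparity) for a generic sensor, plus the per-frame time budget implied by a 30-frames-per-second update rate. It is not Occipital’s implementation; the function names and the focal length, baseline, and disparity values are hypothetical placeholders, not Structure Sensor specifications.

```python
# Generic structured-light triangulation sketch.
# NOT Occipital's algorithm; all names and numbers here are hypothetical.


def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Return the triangulated depth in meters of one projected dot.

    focal_px:     camera focal length in pixels (hypothetical value below)
    baseline_m:   projector-to-camera distance in meters (hypothetical)
    disparity_px: how far the dot appears shifted from its reference position
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def frame_budget_ms(rate_hz: float) -> float:
    """Milliseconds available to compute each depth frame at a given rate."""
    return 1000.0 / rate_hz


if __name__ == "__main__":
    FOCAL_PX = 570.0      # hypothetical focal length
    BASELINE_M = 0.075    # hypothetical 7.5 cm baseline
    FRAME_RATE_HZ = 30    # update rate cited in the article

    # Larger dot shifts mean closer surfaces.
    for d in (10.0, 20.0, 40.0):
        z = depth_from_disparity(FOCAL_PX, BASELINE_M, d)
        print(f"disparity {d:5.1f} px -> depth {z:.3f} m")

    print(f"time budget per depth frame: {frame_budget_ms(FRAME_RATE_HZ):.1f} ms")
```

Because depth varies with the inverse of disparity, halving the dot shift doubles the estimated distance, which is one reason structured-light sensors tend to be less precise at longer range.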

There are lots of sensor systems already available in the virtual reality space. Many, like Leap Motion, focus on hand tracking, an area Occipital isn’t currently pursuing. CEO Jeff Powers instead compared the Structure Sensor to Microsoft’s Kinect, which VR companies have been hacking into their demos, except that the Structure Sensor doesn’t need any tricky setup to work with iPads, iPhones and Android devices.

Powers noted that high-end VR headsets like the HTC Vive and Oculus Rift also use sensors to track movement, and said he believes building sensors directly into the VR device is the only way to go. Though the Structure Sensor doesn’t currently deliver the precise hand tracking that the Vive does, it lets users walk around a virtual world without being confined to a predetermined area.

Eventually, Powers sees systems like the Structure Sensor being built into our mobile devices. Google’s Project Tango phone will be an early example. But beyond that, he said the ultimate form will be wearable devices that constantly read and make sense of our surroundings. That’s the vision of augmented reality that Google Glass hinted at.

True augmented reality is years, if not decades, away. But beginning today, Structure Sensor owners can play S.T.A.R. Ops and think about the virtual-reality experiences they would like to see built in the near term.