[From Discover Magazine’s blog The Crux]

Cheap Soul Teleportation, Coming Soon to a Theater Near You?

April 10th, 2012

Mark Changizi is an evolutionary neurobiologist and director of human cognition at 2AI Labs. He is the author of The Brain from 25000 Feet, The Vision Revolution, and his newest book, Harnessed: How Language and Music Mimicked Nature and Transformed Ape to Man. Also check out his related commentary on a promotional video for Project Glass, Google’s augmented-reality project.

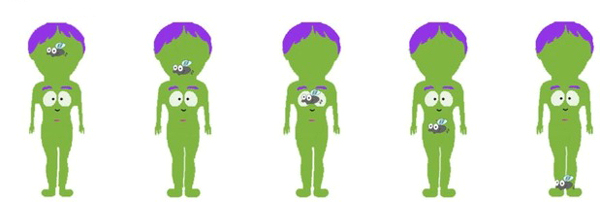

Experience happens here—from my point of view. It could happen over there, or from a viewpoint of an objective nowhere. But instead it happens from the confines of my own body. In fact, it happens from my eyes (or from a viewpoint right between the eyes). That’s where I am. That’s consciousness central—my “soul.” In fact, a recent study by Christina Starmans at Yale showed that children and adults presume that this “soul” lies in the eyes (even when the eyes are positioned, in cartoon characters, in unusual spots like the chest).

The question I wish to raise here is whether we can teleport our soul, and, specifically, how best we might do it. I’ll suggest that we may be able to get near-complete soul teleportation into the movie (or video game) experience, and we can do so with some fairly simple upgrades to the 3D glasses we already wear in movies.

Consider for starters a simple sort of teleportation, the “rubber arm illusion.” If you place your arm under a table out of your view, and have a fake, rubber, arm on the table where your arm usually would be, an experimenter who strokes the rubber arm while simultaneously stroking your real arm on the same spot will trick your brain into believing that the rubber arm is your arm. Your arm—or your arm’s “soul”—has “teleported” from under the table and within your real body into a rubber arm sitting well outside of your body.

It’s the same basic trick to get the rest of the body to transport. If you were to wear a virtual reality suit able to touch you in a variety of spots with actuators, you could be presented with a virtual experience (a movie-like experience) in which you see your virtual body being touched while the bodysuit you’re wearing simultaneously touches your real body in those same spots. Pretty soon your entire body has teleported itself into the virtual body.

And… Yawn, we all know this. We saw James Cameron’s Avatar, after all, which uses this as the premise.

My question here is not whether such self-teleportation is possible, but whether it may be possible to actually do this in theaters and video games. Soon.

The problem with the body teleportation mentioned above is that body suits are expensive, and likely to be so for some time. We’re unlikely to leave theaters and find signs saying, “Please remove your bodysuit and drop it into the bin.” But there may be a much simpler solution, one that concentrates all its teleportation powers directly at the soul itself like a laser beam directed at the eyes.

Before getting to the idea, consider that there were two basic ingredients in the rubber arm illusion:

(i) You need to see the rubber arm as you would see your own arm from a first-person point of view, and you must see something touch that rubber arm, and

(ii) you must feel your own, real arm touched in the same way and at the same time as the visual touching.

How can we aim this directly at the eyes themselves? Restating the two ingredients above, but now for the eyes, we have:

(i) You need to see the virtual character’s eyes as you would see your own eyes from a first-person point of view, and you must see something touch those eyes, and

(ii) you must feel your own, real eyes touched in the same way and at the same time as the visual touching.

We’re getting closer to my actual suggestion, but there remains a serious problem here: movie-goers do not want their eyeballs touched! (And, at any rate, it is unlikely that the on-screen virtual character would like his or her eyeball touched either.)

The eyeballs may be off-limits for touching, but the parts of the face most proximal to the eyes are not. It’s not widely appreciated, but we see parts of our own faces at the borders of our visual perception. Most obviously we see our nose—it’s to the left of our right eye’s view, and to the right of our left eye’s view. But we also see our brows above, and our cheeks below. We’re not consciously aware of this most of the time, but the point is implicitly understood by athletes who place black paint on their upper cheeks to prevent sun glare, which is only sensible if those parts of the cheeks are within the visual field.

This view of your own face in the periphery of your vision is, I suggest, the key to pinpoint-soul teleportation. With respect to the two ingredients of teleportation, we can now say:

(i) You need to see the virtual character’s facial regions near the eyes as you would see your own face from a first-person point of view, and you must see something touch those face regions, and

(ii) you must feel your own, real face touched in the same way and at the same time as the visual touching.

That can be done. Just show the on-screen character’s face at the periphery of the screen, just as it’s in the periphery of your own view of the world. And, when the character’s face is touched, make sure that the movie-goer’s (or video-game player’s) face gets touched as well. When the face around the eyes gets transported, the eyes will get transported along with it. And no eye-touching is required.

Still, there seems to be a downside here as well: The movie has to place the on-screen character’s face, viewed from his or her perspective, onto the screen. Place a nose, brows and cheeks around the screen? That would seem to block some of the view of the movie!

In reality, though, the views of our own face are far in the periphery, and need not block much of the movie screen. But, more importantly, most of the parts of our face that we can see are within our binocular field, and within that region we can see through our own face as if it is semi-transparent. For example, at this very moment you can see your nose (and even see it from both sides simultaneously), and yet it doesn’t block your view of the world out there because what your left eye misses, your right eye sees, and vice versa. Your view of your own nose, as I discuss in my book, The Vision Revolution, is rendered as partially transparent, allowing you to see it and simultaneously see through it.

In order to carry off this see-through-your-own-face trick on screen, we need the movie to be in 3D, or, more specifically, to use our binocular vision. That way your left eye can be fed the view from the character’s left eye, including the view of the left side of his or her face (e.g., the left side of his or her nose placed on the right side of the left eye’s visual field), and your right eye can be fed the analogous view from the character’s right eye.
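To make the left-eye/right-eye geometry concrete, here is a minimal sketch in Python. It treats each eye as a point and the nose tip as a point on the midline, then computes the horizontal angle at which the nose would appear for each eye. The function name `nose_azimuth_deg` and the numbers used (eye separation, nose depth) are illustrative assumptions, not measurements from any study, and real faces are of course not this simple.

```python
import math

# Illustrative assumptions (not measured values):
EYE_SEPARATION_CM = 6.3   # assumed distance between the two eyes
NOSE_DEPTH_CM = 3.0       # assumed distance the nose tip sits in front of the eyes


def nose_azimuth_deg(eye_x_cm, nose_x_cm=0.0, nose_depth_cm=NOSE_DEPTH_CM):
    """Horizontal angle of the nose tip as seen from one eye.

    Positive means the nose lies to the right of straight ahead,
    negative means it lies to the left.
    """
    return math.degrees(math.atan2(nose_x_cm - eye_x_cm, nose_depth_cm))


# The left eye sits left of the midline, the right eye sits right of it.
left_eye_angle = nose_azimuth_deg(-EYE_SEPARATION_CM / 2)
right_eye_angle = nose_azimuth_deg(+EYE_SEPARATION_CM / 2)

print(f"left eye sees nose at  {left_eye_angle:+.1f} degrees")
print(f"right eye sees nose at {right_eye_angle:+.1f} degrees")
```

Under these assumptions the sign of the angle flips between the two eyes: the nose falls on the right side of the left eye's view and on the left side of the right eye's view, which is why each eye's image on screen would need its own rendering of the character's face.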

The viewer in the audience will thereby see the character’s eye-proximal face within his or her visual field (and see through it), and this “rubber face” can be stroked within the movie (by passing branches, or by the character’s own self-touching, or any other means), and this satisfies the first of the two ingredients for teleportation.

We’re part way there. All that we need now is a way to touch the viewer in the audience on the face when the on-screen character’s face is being touched.

This is where the stars align.

Whereas bodysuits with actuators were unreasonable to expect for theaters, now we’re especially interested in touching the regions of the face the eyes can actually see. And notice that we’re already in need of 3D glasses in order to appropriately see the character’s face, and most movies nowadays already have us wear 3D glasses anyway. Glasses frames tend to straddle those regions of your face your eyes can see, namely the brows, upper cheeks, and nose. When you wear 3D glasses at the theater, your face (specifically, the regions of your face that your eyes can see) is already wearing its own bodysuit.

All that we need to do, then, is to upgrade 3D glasses frames so that they can touch these parts of the face when the on-screen character’s face is touched. Some slight modifications in the shape of the frame might be needed to get them closer to the face, and actuators must be placed at points along the frame, able to wirelessly activate in synchrony with the visible face-touching on screen.
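The synchrony requirement can be sketched as a simple lookup: the film carries a list of timed touch cues, and at each moment the glasses fire whichever actuators match the touches visible on screen. The Python below is a minimal sketch under that assumption. `TouchCue`, `active_actuators`, the region names, and the `latency_s` parameter are all hypothetical, invented here for illustration; no such cue format or glasses interface exists that I know of.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TouchCue:
    """One on-screen touch of the character's face (hypothetical format)."""
    start_s: float      # film time at which the visible touch begins
    duration_s: float   # how long the visible touch lasts
    region: str         # e.g. "brow", "nose", "cheek"
    side: str           # "left" or "right"


def active_actuators(cues, film_time_s, latency_s=0.0):
    """Return the (region, side) actuators that should be on right now.

    latency_s is an assumed wireless delay: a command sent now takes
    effect latency_s later, so we look that far ahead in the film.
    """
    t = film_time_s + latency_s
    return {
        (cue.region, cue.side)
        for cue in cues
        if cue.start_s <= t < cue.start_s + cue.duration_s
    }


# Example: a branch brushes the left cheek, then the right side of the nose.
cues = [
    TouchCue(start_s=10.0, duration_s=0.5, region="cheek", side="left"),
    TouchCue(start_s=10.2, duration_s=0.5, region="nose", side="right"),
]

for film_time in (9.9, 10.1, 10.3, 11.0):
    print(film_time, sorted(active_actuators(cues, film_time)))
```

The look-ahead matters because the illusion depends on the felt touch and the seen touch arriving together; how much delay a viewer would tolerate is an empirical question this sketch does not answer.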

Who needs an exorbitant suit of actuators when a cheapish pair of glasses might suffice?! I’m hoping that this proposal serves as a call to the movie and video-game industry to give it a try.